A Filmmaker’s Guide to Creating Holograms

With stereoscopy’s demise, are holograms the way forward to true 3D? If so, how can you get involved? Let’s find out.

Those who proclaim that three-dimensional, depth-based video is the future of the moving image often have vested interests. However, they may be right: more efficient compression techniques and faster pipelines like 5G are preparing the ground for data-heavy 360˚ video. In a way, holograms are a natural progression.

Volumetric capture, the technique behind these holograms, is different from motion or performance capture, although the two often work together and both rely on depth measurements.

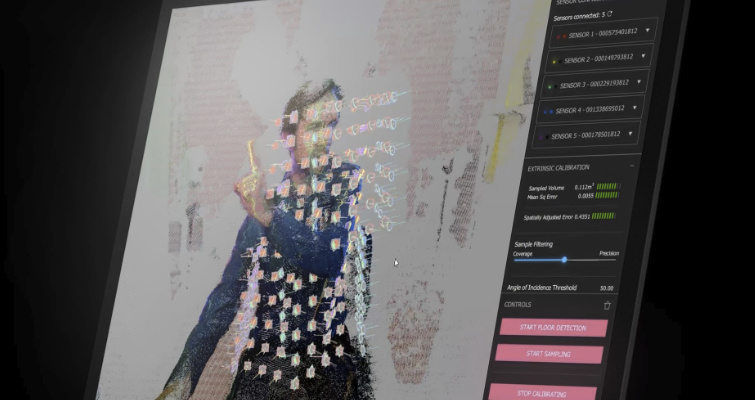

Simply put, MoCap captures movement using sensors worn on a suit. Volumetric capture records everything within a physical area and produces 3D information in the form of a point cloud or a 3D polygon mesh. The data from both is then passed to VFX studios to manipulate and render.
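To make the difference between a point cloud and a polygon mesh concrete, here is a minimal sketch. The `PointCloud` and `Mesh` types are hypothetical, written for illustration only; real volumetric tools use their own file formats. A point cloud is just unconnected samples, while a mesh adds triangles that join shared vertices into a surface (which is why it has a measurable surface area).

```python
import math
from dataclasses import dataclass, field

Vec3 = tuple[float, float, float]

@dataclass
class PointCloud:
    # Unconnected samples: one position and one RGB colour per point.
    positions: list[Vec3] = field(default_factory=list)
    colors: list[tuple[int, int, int]] = field(default_factory=list)

@dataclass
class Mesh:
    # Shared vertex positions plus triangles (index triples into `vertices`).
    vertices: list[Vec3] = field(default_factory=list)
    faces: list[tuple[int, int, int]] = field(default_factory=list)

    def surface_area(self) -> float:
        """Sum of triangle areas, each computed as 0.5 * |AB x AC|."""
        total = 0.0
        for i, j, k in self.faces:
            a, b, c = self.vertices[i], self.vertices[j], self.vertices[k]
            ab = [b[n] - a[n] for n in range(3)]
            ac = [c[n] - a[n] for n in range(3)]
            cross = (
                ab[1] * ac[2] - ab[2] * ac[1],
                ab[2] * ac[0] - ab[0] * ac[2],
                ab[0] * ac[1] - ab[1] * ac[0],
            )
            total += 0.5 * math.sqrt(sum(v * v for v in cross))
        return total

    def to_point_cloud(self) -> PointCloud:
        """Drop the connectivity: the vertices alone form a sparse point cloud."""
        return PointCloud(positions=list(self.vertices),
                          colors=[(255, 255, 255)] * len(self.vertices))

# A 1 x 1 square built from two triangles.
square = Mesh(
    vertices=[(0, 0, 0), (1, 0, 0), (1, 1, 0), (0, 1, 0)],
    faces=[(0, 1, 2), (0, 2, 3)],
)
print(square.surface_area())                   # 1.0
print(len(square.to_point_cloud().positions))  # 4
```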

However, there are some logistical downsides. Stereoscopy, with its two channels, at least doubled data rates. Higher frame rate cinematography, sometimes shot in 3D as well, meant even more data and demanded more efficient storage plans.
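A back-of-the-envelope way to see how these multipliers stack: the sketch below assumes raw data grows linearly with the number of views and the frame rate, and takes single-view 24 fps as the baseline. Both are simplifying assumptions; compressed footage won't scale exactly this way.

```python
def relative_data_rate(views: int, fps: float, base_fps: float = 24.0) -> float:
    """Raw data rate relative to a single-view (2D) capture at base_fps.

    Assumes uncompressed data scaling linearly with views and frame rate.
    """
    return views * fps / base_fps

print(relative_data_rate(1, 24))  # 1.0 -> 2D at 24 fps
print(relative_data_rate(2, 24))  # 2.0 -> stereo 3D at 24 fps
print(relative_data_rate(2, 48))  # 4.0 -> stereo 3D at a higher frame rate
```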

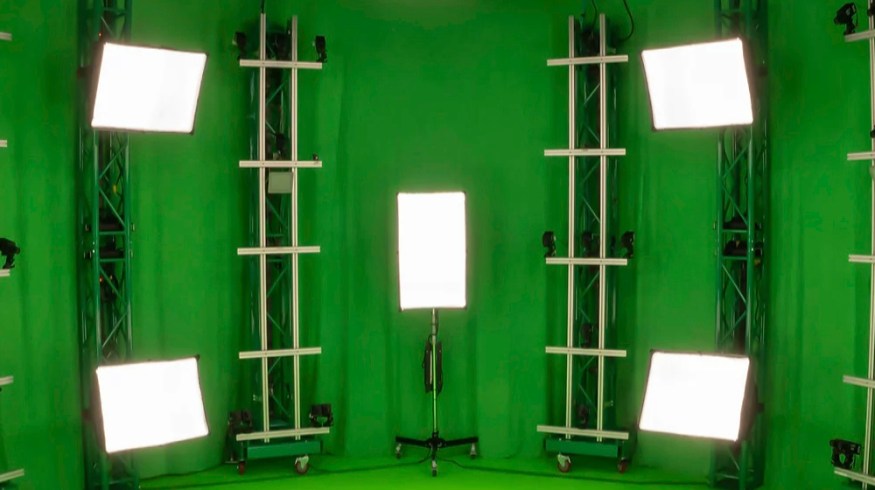

Volumetric video is all about immersion, and that requires multiple points of capture: a volume is usually built from more than 100 infrared and RGB cameras.

As a result, volumetric video capture can rack up around 16 GB of data a minute before any editing.
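To put 16 GB a minute in perspective, the quick calculation below converts it into a sustained bandwidth, a one-hour storage total, and a per-camera share for a 100-camera volume. It assumes decimal units (1 GB = 8 gigabits) and an even split across cameras; the one-hour duration and camera count are illustrative choices, not figures from a real shoot.

```python
def capture_stats(gb_per_minute: float, minutes: float, cameras: int) -> dict:
    """Rough storage and bandwidth figures for a volumetric shoot.

    Assumes decimal units and data spread evenly across cameras.
    """
    return {
        "gbit_per_second": gb_per_minute * 8 / 60,
        "total_gb": gb_per_minute * minutes,
        "gb_per_minute_per_camera": gb_per_minute / cameras,
    }

stats = capture_stats(gb_per_minute=16, minutes=60, cameras=100)
print(round(stats["gbit_per_second"], 2))  # 2.13
print(stats["total_gb"])                   # 960
print(stats["gb_per_minute_per_camera"])   # 0.16
```

In other words, an hour of raw capture at that rate is close to a terabyte before anything is edited.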

Where Volumetric Video Wins

As mentioned in our introduction to holograms, the entertainment industry doesn’t yet have a sustained way to distribute this new form of 3D. Product holograms, on the other hand, are likely to be huge, especially for smartphones and other portable devices.

For instance, in 2017, Barbie presented a holographic doll that responds to voice commands. In the same year, Verizon and Korea Telecom made the first holographic call using 5G technology. To make the call possible, two holograms were formed. More broadly, many industries recognize the need for sensors that measure depth; it’s the missing ingredient that makes devices more intelligent and more helpful to humans.

In the media world, volumetrics also promises to make post-production pipelines more efficient by simplifying the process of capturing and animating. An actor’s likeness, authentic performance, and wardrobe can all be captured in a single process. The captured data can be used for both previs and high-resolution delivery, and captured performances can also be used in real-time engines such as Unreal and Unity.

Arcturus, a producer of hologram editing and encoding software, has compared volumetric capture with traditional VFX CG production in terms of cost, time, and staffing levels.

For character creation, for instance, traditional CG can take between 10 and 60 days on bigger movies, whereas volumetric video can create human assets in under 24 hours. CG costs can reach $70,000, while the same operation done with volumetric video could cost $15,000.

Team sizes differ, too: 10-20 CG artists may work on the same assets, while volumetric video capture needs only 2-3 people to produce them. The process differs as well. CG is usually slow because it’s manual, whereas volumetrics is essentially automated.
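The Arcturus figures quoted above can be turned into simple ratios. This sketch treats "under 24 hours" as at most one day and pairs the smallest team with the shortest schedule (and the largest with the longest) to get a rough effort range; both are assumptions for illustration, not part of the original comparison.

```python
# Figures quoted from Arcturus's comparison above.
CG_COST_USD, VOLUMETRIC_COST_USD = 70_000, 15_000
CG_DAYS, CG_TEAM = (10, 60), (10, 20)
# Assumption: "under 24 hours" is treated as at most one day.
VOL_DAYS, VOL_TEAM = (1, 1), (2, 3)

def person_days(team: tuple[int, int], days: tuple[int, int]) -> tuple[int, int]:
    """Rough effort range: smallest team x shortest schedule, largest x longest."""
    return team[0] * days[0], team[1] * days[1]

cost_ratio = CG_COST_USD / VOLUMETRIC_COST_USD
print(f"{cost_ratio:.1f}x")             # 4.7x
print(person_days(CG_TEAM, CG_DAYS))    # (100, 1200)
print(person_days(VOL_TEAM, VOL_DAYS))  # (2, 3)
```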

How to Start with Volumetric Video

You may have already experienced some volumetric video without knowing it. For those of us who were (and are) Xbox gamers, Microsoft released an accessory called Kinect in 2010. The idea was that your gestures and body movements would free you from a physical controller. Its camera sensors, including infrared detectors, produced a basic depth point cloud from which a 20-point skeleton was tracked.
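Here is a sketch of what a 20-point skeleton might look like as data, and how a simple gesture could be read from it. The joint names below are our own, loosely modeled on the joints the original Kinect tracked; they are not an SDK API. The coordinate convention (metres, Y pointing up) is also an assumption.

```python
from dataclasses import dataclass

# Hypothetical 20-joint layout, loosely modeled on the original Kinect skeleton.
JOINTS = (
    "hip_center", "spine", "shoulder_center", "head",
    "shoulder_left", "elbow_left", "wrist_left", "hand_left",
    "shoulder_right", "elbow_right", "wrist_right", "hand_right",
    "hip_left", "knee_left", "ankle_left", "foot_left",
    "hip_right", "knee_right", "ankle_right", "foot_right",
)

@dataclass
class Skeleton:
    # Joint name -> (x, y, z) in metres; y is assumed to point up.
    joints: dict[str, tuple[float, float, float]]

    def __post_init__(self) -> None:
        missing = set(JOINTS) - set(self.joints)
        if missing:
            raise ValueError(f"missing joints: {sorted(missing)}")

def right_hand_raised(s: Skeleton) -> bool:
    """A toy gesture: the right hand is higher than the head."""
    return s.joints["hand_right"][1] > s.joints["head"][1]

pose = {name: (0.0, 1.0, 2.0) for name in JOINTS}
pose["head"] = (0.0, 1.6, 2.0)
pose["hand_right"] = (0.3, 1.8, 2.0)
print(right_hand_raised(Skeleton(pose)))  # True
```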

It was revolutionary at the time but never really caught on, and Microsoft abandoned it for gaming in 2017.

However, the technology lives on in the Azure Kinect DK, which brings depth sensing to industries such as health, manufacturing, and retail. The development kit costs around $400 and offers a 1MP depth camera, a 360˚ microphone array, a 12MP RGB camera, and an orientation sensor.

As depth measurement becomes more critical across all kinds of sensors, there will be thousands of use cases, and that is where Microsoft is heading with this product.

One example is a US car rental company that uses depth cameras to check returning cars for body damage. Each vehicle is scanned on return, and the depth information reveals any deformities or dents. Because the process is quick and automatic, the company doesn’t have to take the renter’s word about new bumps picked up during the rental.
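The core idea behind this kind of damage check can be sketched as a depth-map comparison: subtract a reference scan from the new one and flag pixels that moved more than a tolerance. This is a generic illustration, not the rental company's actual system. It assumes both depth maps are already aligned pixel for pixel and given in millimetres, and the 3 mm threshold is a hypothetical value.

```python
def find_deformities(reference, scan, threshold_mm=3.0):
    """Flag pixels where a new depth scan differs from a reference scan.

    Both inputs are 2D lists of distances (mm) from the camera, assumed to
    be aligned pixel for pixel. A dent moves the surface away from the
    camera (positive difference); a bulge moves it closer (negative).
    Returns (row, col, difference_mm) for every pixel beyond the threshold.
    """
    flagged = []
    for r, (ref_row, scan_row) in enumerate(zip(reference, scan)):
        for c, (ref_d, new_d) in enumerate(zip(ref_row, scan_row)):
            diff = new_d - ref_d
            if abs(diff) > threshold_mm:
                flagged.append((r, c, diff))
    return flagged

reference = [[1000.0] * 4 for _ in range(3)]
scan = [row[:] for row in reference]
scan[1][2] = 1006.5  # a 6.5 mm dent in one spot
print(find_deformities(reference, scan))  # [(1, 2, 6.5)]
```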

Companies like EF EVE can take the output of multiple Azure Kinects and produce volumetric video. It’s a new service for companies that are new to volumetric video and don’t have the budget for a volume space with numerous cameras.
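Combining several depth cameras generally means moving each camera's points into one shared coordinate system using that camera's calibrated pose (a rotation R and a translation t). The sketch below shows that generic step only; it is not EF EVE's method, and it assumes the poses are already known from calibration.

```python
Vec3 = tuple[float, float, float]
Mat3 = tuple[Vec3, Vec3, Vec3]

def to_world(points: list[Vec3], rotation: Mat3, translation: Vec3) -> list[Vec3]:
    """Map camera-space points into shared world space: p_world = R * p + t."""
    world = []
    for p in points:
        world.append(tuple(
            sum(rotation[i][j] * p[j] for j in range(3)) + translation[i]
            for i in range(3)
        ))
    return world

def fuse(cameras: list[tuple[list[Vec3], Mat3, Vec3]]) -> list[Vec3]:
    """Merge every camera's points into one cloud, given each camera's pose."""
    merged: list[Vec3] = []
    for points, rotation, translation in cameras:
        merged.extend(to_world(points, rotation, translation))
    return merged

IDENTITY: Mat3 = ((1, 0, 0), (0, 1, 0), (0, 0, 1))
# Camera B faces camera A from 4 m away: rotated 180 degrees about the Y axis.
TURN_Y_180: Mat3 = ((-1, 0, 0), (0, 1, 0), (0, 0, -1))

cloud = fuse([
    ([(0.0, 0.0, 2.0)], IDENTITY, (0.0, 0.0, 0.0)),    # A sees a point 2 m ahead
    ([(0.0, 0.0, 2.0)], TURN_Y_180, (0.0, 0.0, 4.0)),  # B sees the same point 2 m ahead
])
print(cloud)  # [(0.0, 0.0, 2.0), (0.0, 0.0, 2.0)] -> both cameras agree
```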

Other cameras, like Intel’s RealSense, also serve the growing need for depth sensors. Use cases range from robotics to facial authentication, and Intel is even preparing for robot-to-human interaction using skeletal movement and gestures. “People tracking” covers more general use cases like crowd monitoring and analysis.

If you want to start experimenting with volumetric video, you need some kind of depth sensor, though it no longer has to be a dedicated device like the Kinect. The public didn’t really know about LiDAR until Apple began putting a LiDAR sensor in its iPhones, starting with the iPhone 12 Pro models.

LiDAR is a laser scanning method used to measure and record landscapes or buildings to create 3D scans and depth maps.
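Many LiDAR sensors work on a time-of-flight principle: they time how long a laser pulse takes to bounce back from a surface. Since the pulse travels out and back, the distance is half of the speed of light multiplied by the round-trip time. A minimal sketch of that formula, with a hypothetical 20-nanosecond reading:

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0

def tof_distance_m(round_trip_s: float) -> float:
    """Distance to a surface from a laser pulse's round-trip time.

    The pulse travels to the surface and back, so the one-way distance
    is half the total path length.
    """
    return SPEED_OF_LIGHT_M_S * round_trip_s / 2

# A return after 20 nanoseconds puts the surface about 3 metres away.
print(round(tof_distance_m(20e-9), 3))  # 2.998
```

The tiny time scale is why these sensors need very precise timing electronics: a 1 ns error is roughly 15 cm of range.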

LiDAR produces 3D point clouds, which account for every measured point in a scene or object. Compared with a 2D pixel, which has only X and Y coordinates, each LiDAR point adds Z (depth) information.
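Turning a depth image into a point cloud is usually done with a pinhole camera model: each pixel's column and row, together with its depth Z, are projected back into metric X and Y. The sketch below assumes known camera intrinsics (focal lengths `fx`, `fy` in pixels and principal point `cx`, `cy`); the values used here are hypothetical.

```python
def depth_to_points(depth, fx, fy, cx, cy):
    """Back-project a depth map into 3D points with a pinhole camera model.

    depth[v][u] is the Z distance (metres) at pixel column u, row v;
    zero means "no reading" and is skipped. fx, fy are focal lengths in
    pixels and (cx, cy) is the principal point.
    """
    points = []
    for v, row in enumerate(depth):
        for u, z in enumerate(row):
            if z <= 0:
                continue
            x = (u - cx) * z / fx
            y = (v - cy) * z / fy
            points.append((x, y, z))
    return points

depth = [
    [2.0, 2.0, 2.0],
    [2.0, 1.0, 0.0],  # centre pixel is closer; right pixel has no reading
    [2.0, 2.0, 2.0],
]
cloud = depth_to_points(depth, fx=500.0, fy=500.0, cx=1.0, cy=1.0)
print(len(cloud))  # 8
print(cloud[4])    # (0.0, 0.0, 1.0) -> the centre pixel
```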

Companies like Depthkit offer software that helps you capture with your LiDAR-equipped iPhone, or with a better camera paired with a separate depth sensor.

Here’s an informative tutorial on how to do it. Below this video are some examples from Depthkit’s clients.

Performance capture with body-worn sensors and 360˚ cameras can give you some sense of depth, but both now seem a bit old-fashioned.

However, there are other ways to produce depth for your projects, such as photogrammetry. Multiple photos of a subject are taken from various angles and stitched together by computational algorithms to generate a 3D model.
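Photogrammetry software typically triangulates many views at once, but the underlying geometry shows up in the simplest case: two photos taken side by side after rectification. A feature that shifts by a disparity d between the images lies at depth Z = f * B / d, where f is the focal length in pixels and B is the distance between the two camera positions. This two-view formula is a simplified stand-in for full multi-view reconstruction, and the numbers are hypothetical.

```python
def depth_from_disparity(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Depth of a feature seen in two rectified, side-by-side photos.

    Nearby points shift more between the photos, so depth falls as
    disparity grows.
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px

print(depth_from_disparity(800.0, 0.25, 100.0))  # 2.0 (metres)
print(depth_from_disparity(800.0, 0.25, 200.0))  # 1.0 (metres)
```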

Light field cameras, meanwhile, use micro-lenses to record the direction of incoming light, which lets you manipulate everything from focal point to lighting after the shot.
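One common way to refocus light field data after the shot is "shift-and-add": each sub-aperture view (the image seen through one part of the lens) is shifted in proportion to its position in the aperture, and the views are averaged. Objects whose parallax matches the shift line up and look sharp; everything else blurs. The sketch below is a simplified 1D version with integer shifts and hypothetical data; real cameras work with 4D light fields and sub-pixel shifts.

```python
def refocus(views: dict[int, list[float]], shift_per_view: int) -> list[float]:
    """Synthetic refocusing by shift-and-add (1D, integer shifts).

    `views` maps an aperture position u (e.g. -1, 0, 1) to the 1D image
    seen from there. Each view is sampled at x + u * shift_per_view
    (clamped at the edges) and the samples are averaged.
    """
    width = len(next(iter(views.values())))
    out = []
    for x in range(width):
        total = 0.0
        for u, row in views.items():
            sx = min(max(x + u * shift_per_view, 0), width - 1)
            total += row[sx]
        out.append(total / len(views))
    return out

# A bright point that shifts one pixel per aperture step (parallax).
views = {
    -1: [0, 0, 1, 0, 0, 0, 0],
    0: [0, 0, 0, 1, 0, 0, 0],
    1: [0, 0, 0, 0, 1, 0, 0],
}
print(refocus(views, 1))                           # [0.0, 0.0, 0.0, 1.0, 0.0, 0.0, 0.0]
print([round(v, 2) for v in refocus(views, 0)])    # [0.0, 0.0, 0.33, 0.33, 0.33, 0.0, 0.0]
```

With a shift of 1 the point is in focus (one bright pixel); with a shift of 0 its light is spread across three pixels.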

The Future of Volumetrics

Many of us are happy watching or creating in 2D. Stereo cinematography never really succeeded, and there were good reasons why. Maybe we’re okay with the flat video we’ve had for 100 years. But because volumetric video doesn’t rely on the entertainment industry for its success, the creative industry will have time to adjust.

As we see more of what virtual production can achieve, especially with game engines, these new types of holograms will join the virtual revolution.

For now, holograms may simply wait in the wings until they’re ready.


Cover image via Avatar Dimension.