NAB 2022: Skyglass App for Virtual Production

The Skyglass app uses the iPhone’s LiDAR sensor for real-time virtual production. Let’s get into the nuts and bolts of this new filmmaking prospect.

NAB is so big that sometimes you can discover one-man-band companies that have managed to get a booth for next to no money right at the last minute, and sometimes they’re the most interesting. The main problem is finding them in the cavernous halls of this enormous event.

This year, at the first NAB in three years, more than 900 companies attended, including around 160 first-timers. If you have never been to NAB, some of its halls, like the North Hall, are genuinely massive.

Companies like Blackmagic Design and Adobe are in this hall, which NAB has designated Create under its new scheme of grouping exhibitors by topic. Other halls are categorized as Capitalize, Connect, and Intelligent Content.

The bigger booths are weighted towards the front of the hall rather than the back, near the car parks. As you walk towards the back, the booths thin out and get much smaller. It’s here you can discover some exciting, albeit fledgling, technology.

Ryan Burgoyne’s Skyglass is one of those. Ryan worked at Apple for six years as a senior engineer on the team that built Reality Composer, a tool that makes it easy for developers to build AR apps.

He founded Skyglass in November 2021 to make virtual production techniques accessible to independent filmmakers and small studios.

What Is Skyglass?

Ryan’s idea was to develop an app and a cloud rendering service that would appeal to content creators who can’t afford a virtual set or expensive cameras and tracking systems. He wanted to use the LiDAR sensor introduced on the iPhone 12 Pro models.

He describes his vision:

Skyglass is a virtual production app that makes it easy for students, indie filmmakers, and small studios to add visual effects (VFX) to their shots right on set. Using the sensors built into your iPhone and cloud rendering powered by Unreal Engine, Skyglass places your shot in a photorealistic virtual environment.

– Ryan Burgoyne

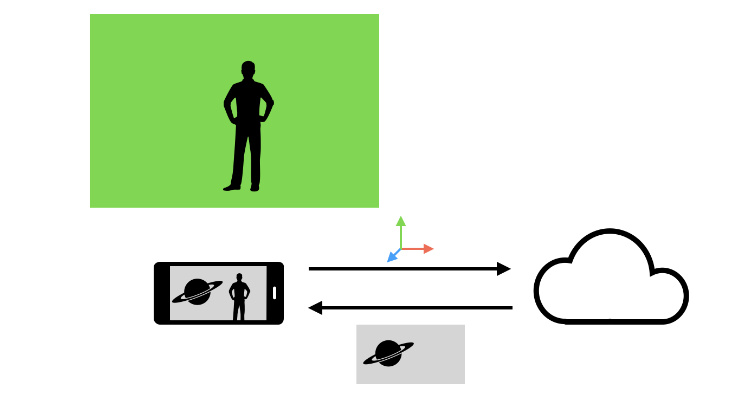

His idea was to use LiDAR, which measures how long light takes to travel to an object and back, and uses that measurement to position the object virtually. The app takes the data from LiDAR and sends it to the cloud, where Unreal Engine renders a virtual environment from the camera’s perspective. That render is then streamed back to the phone for real-time compositing with live footage.
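To make the "journey of light" idea concrete, here is a minimal sketch of the general time-of-flight principle: distance is half the round-trip time multiplied by the speed of light. The function names are made up for illustration, and this says nothing about how the iPhone's sensor or the Skyglass app actually processes its readings.

```python
# General time-of-flight arithmetic, not Skyglass or Apple code.
# Speed of light in a vacuum, in metres per second (exact by definition).
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def distance_from_round_trip(round_trip_seconds: float) -> float:
    """Return the one-way distance in metres for a measured round-trip time."""
    if round_trip_seconds < 0:
        raise ValueError("round-trip time cannot be negative")
    # The pulse travels out and back, so only half the path is the distance.
    return SPEED_OF_LIGHT_M_PER_S * round_trip_seconds / 2.0


def round_trip_for_distance(distance_m: float) -> float:
    """Return the round-trip time in seconds for an object at distance_m."""
    if distance_m < 0:
        raise ValueError("distance cannot be negative")
    return 2.0 * distance_m / SPEED_OF_LIGHT_M_PER_S


if __name__ == "__main__":
    # An actor standing three metres from the phone.
    t = round_trip_for_distance(3.0)
    print(f"round trip: {t * 1e9:.2f} ns")
    print(f"distance:   {distance_from_round_trip(t):.2f} m")
```

The point of the arithmetic is scale: at on-set distances the round trip takes only a few billionths of a second, which is why this kind of ranging needs a dedicated sensor.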

In effect, Skyglass is tracking the position and orientation of your phone’s camera and streaming it to the cloud, where a render of a virtual environment is created and sent back.
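As a rough illustration of what "streaming position and orientation" could involve, the sketch below packs one camera pose into a small message and unpacks it again. Everything here is an assumption made for the example: the `CameraPose` type, its field names, and the use of JSON are hypothetical, and Skyglass has not published its actual wire format.

```python
import json
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class CameraPose:
    """Hypothetical per-frame pose message; not Skyglass's real format."""
    timestamp_s: float        # capture time of the frame, in seconds
    position_m: tuple         # (x, y, z) in metres
    orientation_xyzw: tuple   # unit quaternion (x, y, z, w)


def encode_pose(pose: CameraPose) -> bytes:
    """Serialize a pose to compact UTF-8 JSON, ready to send upstream."""
    return json.dumps(asdict(pose), separators=(",", ":")).encode("utf-8")


def decode_pose(payload: bytes) -> CameraPose:
    """Rebuild a pose on the receiving side (the renderer, in this sketch)."""
    data = json.loads(payload.decode("utf-8"))
    return CameraPose(
        timestamp_s=float(data["timestamp_s"]),
        position_m=tuple(data["position_m"]),
        orientation_xyzw=tuple(data["orientation_xyzw"]),
    )


if __name__ == "__main__":
    pose = CameraPose(1.5, (0.0, 1.6, -2.0), (0.0, 0.0, 0.0, 1.0))
    payload = encode_pose(pose)
    print(len(payload), "bytes per pose")
    print(decode_pose(payload))
```

A pose like this is tiny next to the video coming back, so in a setup of this kind the recommended download speed would matter far more than the upload.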

What Do You Need?

Skyglass says that all you need to get started with real-time VFX is an iPhone running the Skyglass app and an internet connection (a download speed of at least 10 Mbps is recommended). Skyglass requires the LiDAR scanner available on iPhone 12 Pro, 12 Pro Max, 13 Pro, or 13 Pro Max.

Skyglass includes a library of virtual environments to choose from. You can even create your own virtual environment by building an Asset Pack and uploading it to Skyglass Cloud or Skyglass Server.

Skyglass has its own Unreal Engine plug-in, called Forge, for creating an Asset Pack. You can import 3D models you’ve made or use assets from the Unreal Engine Marketplace.

If you want to fine-tune your composite, you can download just the background plates from Skyglass Cloud or Skyglass Server, along with the green-screen footage from your iPhone, and import them into compositing tools such as Nuke, Fusion, or After Effects.

Using Other Cameras

Skyglass can also work with cameras better than a recent iPhone. But this entails calibrating the offsets between the iPhone’s sensor and the cine camera’s, for instance, via an NDI network. It seems pretty complicated, but it allows the render to take the focus, iris, and zoom metadata of native lenses into account so that it matches your captured footage.
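To give a feel for what "calibrating offsets" means, here is a minimal sketch that treats the phone as the tracker and derives the cine camera's pose by applying a fixed, measured offset to it. The helper names and the example numbers are hypothetical, and this is ordinary rigid-transform math rather than Skyglass's own calibration procedure.

```python
import math

# A pose is a 4x4 homogeneous matrix (rotation plus translation in metres).


def matmul4(a, b):
    """Multiply two 4x4 matrices given as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]


def translation(x, y, z):
    """Pure translation by (x, y, z)."""
    return [[1.0, 0.0, 0.0, x],
            [0.0, 1.0, 0.0, y],
            [0.0, 0.0, 1.0, z],
            [0.0, 0.0, 0.0, 1.0]]


def yaw(angle_rad):
    """Rotation about the vertical (Y) axis."""
    c, s = math.cos(angle_rad), math.sin(angle_rad)
    return [[c, 0.0, s, 0.0],
            [0.0, 1.0, 0.0, 0.0],
            [-s, 0.0, c, 0.0],
            [0.0, 0.0, 0.0, 1.0]]


def cine_pose_from_phone(world_from_phone, phone_from_cine):
    """Apply the calibrated phone-to-cine offset to the tracked phone pose."""
    return matmul4(world_from_phone, phone_from_cine)


def position_of(pose):
    """Extract the translation part of a pose."""
    return (pose[0][3], pose[1][3], pose[2][3])


if __name__ == "__main__":
    # Phone tracked at (1.0, 1.5, 0.0) m, turned 90 degrees about vertical.
    world_from_phone = matmul4(translation(1.0, 1.5, 0.0), yaw(math.pi / 2))
    # Assumed rig: cine sensor sits 10 cm below and 20 cm ahead of the phone.
    phone_from_cine = translation(0.0, -0.1, 0.2)
    cine = cine_pose_from_phone(world_from_phone, phone_from_cine)
    print(position_of(cine))
```

The offset is measured once for a given rig and reused every frame, so if the phone shifts on its mount the calibration would need to be repeated.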

Hopefully, we can find out more about Skyglass from Ryan and try the app out for ourselves. If you’re at NAB, find him in the North Hall at booth 1138 or check his website at www.skyglass.com/.

Cover image via Skyglass.